A Family History Month experiment – search millions of name records from GLAM organisations

There’s a lot of rich historical data contained within the indexes that Australian GLAM organisations provide to help people navigate their records. These indexes, often created by volunteers, allow access by key fields such as name, date or location. They aid discovery, but also allow new forms of analysis and visualisation. Kate Bagnall and I wrote about some of the possibilities, and the difficulties, in this recently published article.

Many of these indexes can be downloaded from government data portals. The GLAM Workbench demonstrates how these can be harvested, and provides a list of available datasets to browse. But what’s inside them? The GLAM CSV Explorer visualises the contents of the indexes to give you a sneak peek and encourage you to dig deeper.
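Several Australian government data portals are built on CKAN, so a harvest can start with CKAN's `package_search` action. The sketch below is not the GLAM Workbench's own code. It assumes the portal exposes the standard CKAN Action API (the data.gov.au endpoint URL is an assumption, so check the portal's API documentation), builds a search URL restricted to datasets with CSV resources, and pulls the CSV links out of a decoded response.

```python
from urllib.parse import urlencode

# Assumed endpoint: the CKAN Action API on data.gov.au. Other CKAN portals follow the same path pattern.
CKAN_SEARCH = "https://data.gov.au/data/api/3/action/package_search"

def search_url(query, rows=100, start=0, endpoint=CKAN_SEARCH):
    """Build a package_search URL limited to datasets that have CSV resources."""
    params = {"q": query, "fq": "res_format:CSV", "rows": rows, "start": start}
    return f"{endpoint}?{urlencode(params)}"

def csv_resources(response):
    """Return (dataset title, CSV URL) pairs from a decoded package_search response."""
    pairs = []
    for package in response["result"]["results"]:
        for resource in package.get("resources", []):
            # Resource formats are free text in CKAN, so compare case-insensitively.
            if resource.get("format", "").upper() == "CSV":
                pairs.append((package["title"], resource["url"]))
    return pairs
```

To harvest everything, you would fetch each URL (for example with `urllib.request.urlopen` and `json.load`), then increase `start` by `rows` until you reach the `count` value in the response.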

There are even more indexes available from the NSW State Archives. Most of these aren’t accessible through the NSW government data portal yet, but I managed to scrape them from the website a couple of years ago and made them available as CSVs for easy download.

It’s Family History Month at the moment, and the other night I thought of an interesting little experiment using the indexes. I’ve been playing round with Datasette lately. It’s a fabulous tool for exploring tabular data, like CSVs. I also noticed that Datasette’s creator Simon Willison had added a search-all plugin that lets you run a full-text search across multiple databases and tables. Hmmm, I wondered, would it be possible to use Datasette to provide a way of searching for names across all those GLAM indexes?

After a few nights’ work, I found the answer was yes.

Try out my new aggregated search interface here!

(The cloud service it uses runs on demand, so if it has gone to sleep, it might take a little while to wake up again – just be patient for a few seconds.)

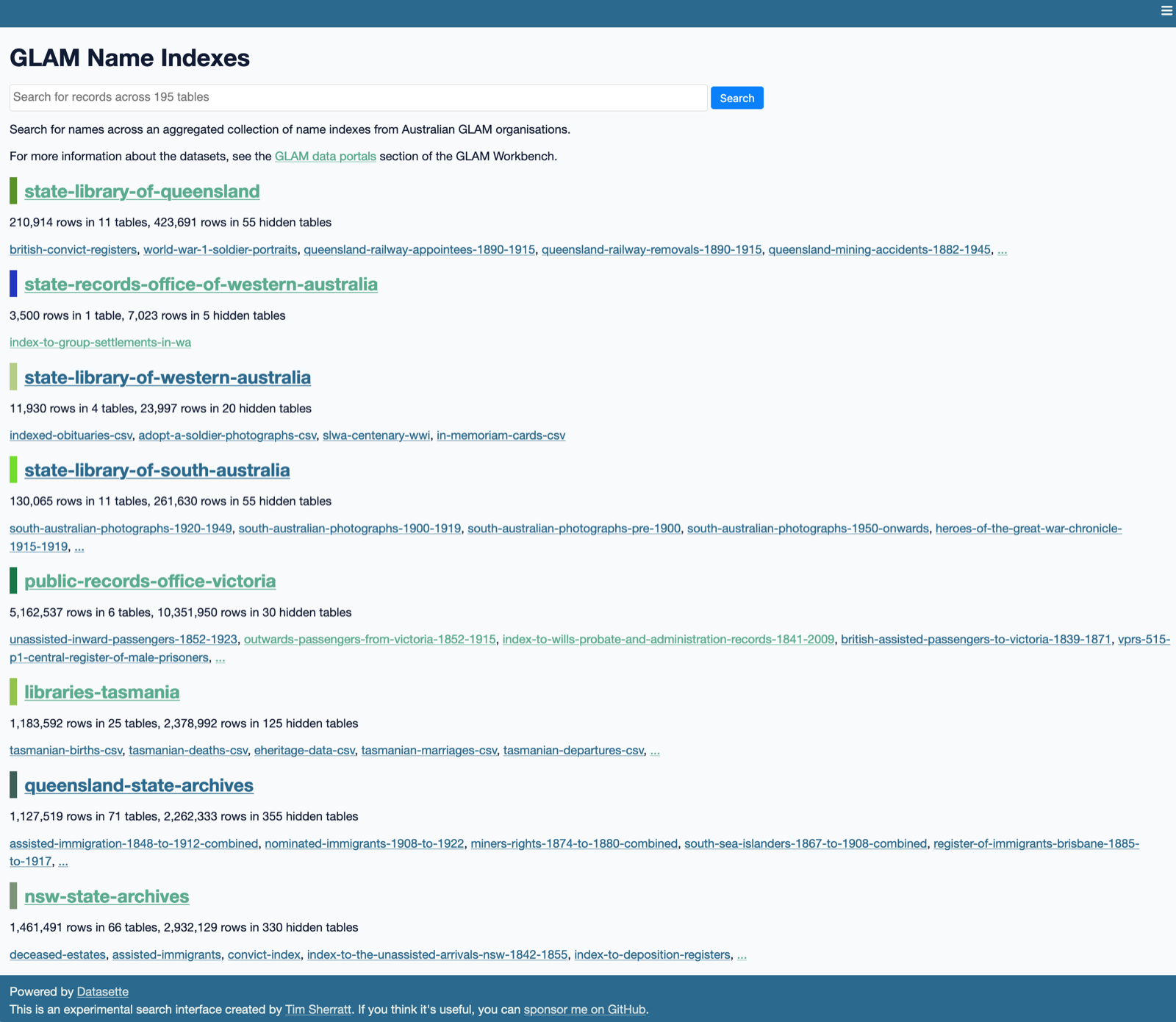

Currently, the GLAM Name Search interface lets you search for names across 195 indexes from eight GLAM organisations. Altogether, there are more than 9.2 million rows of data to explore!

It’s simple to use – just enter a name in the search box and Datasette will search each index in turn, displaying the first five matching results. You can click through to view all results from a specific index. Not surprisingly, the aggregated name search only searches columns containing names. However, once you click through to an individual table, you can apply additional filters or facets.
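To show roughly what "search each index in turn" involves, here is a rough imitation in plain Python and SQLite. It is not the search-all plugin's actual code. It assumes your SQLite build includes FTS5, and that each searchable table `t` has an external-content FTS5 table named `t_fts` (the naming convention sqlite-utils uses). It returns up to five matches per table.

```python
import sqlite3

def quote(identifier):
    """Quote an SQLite identifier such as a table name."""
    return '"' + identifier.replace('"', '""') + '"'

def fts_query(term):
    """Wrap each word in double quotes so input like O'Brien can't break FTS5 query syntax."""
    return " ".join('"' + word.replace('"', '""') + '"' for word in term.split())

def search_all(databases, term, limit=5):
    """Search every FTS5-indexed table in each database.

    `databases` maps a database name to an open sqlite3 connection. Returns a dict
    keyed by (database, table) holding up to `limit` rows, for tables with matches only.
    """
    results = {}
    query = fts_query(term)
    if not query:
        return results
    for db_name, conn in databases.items():
        tables = conn.execute("SELECT name, sql FROM sqlite_master WHERE type = 'table'").fetchall()
        for name, sql in tables:
            # FTS tables are virtual tables; their shadow tables (_data, _idx...) are skipped.
            if not (name.endswith("_fts") and sql and sql.upper().startswith("CREATE VIRTUAL TABLE")):
                continue
            base, fts = quote(name[: -len("_fts")]), quote(name)
            rows = conn.execute(
                f"SELECT {base}.* FROM {base} JOIN {fts} ON {base}.rowid = {fts}.rowid "
                f"WHERE {fts} MATCH ? LIMIT ?",
                (query, limit),
            ).fetchall()
            if rows:
                results[(db_name, name[: -len("_fts")])] = rows
    return results
```

Multiple words are combined with an implicit AND, so searching for `john smith` only returns rows whose indexed name columns contain both words.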

To create the aggregated search interface, I worked through the list of CSVs I’d harvested from government data portals, identifying those that contained the names of people and discarding those that contained administrative, rather than historical, data. I also noted which columns contained the names, so I could index their contents once they’d been added to the database. Usually these were fields such as Surname or Given names, but sometimes names were in the record title or notes.
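Checking column headers is the kind of step a small helper could speed up. The sketch below is a hypothetical shortlisting aid, not the method described above (which was done by hand). The `NAME_HINTS` keywords are assumptions. Names buried in a title or notes field won't be detected, so the final choice still needs a human.

```python
import csv
import io

# Hypothetical hints for shortlisting columns; adjust them to suit the datasets.
NAME_HINTS = ("surname", "given name", "forename", "first name", "family name", "full name")

def candidate_name_columns(csv_text):
    """Return header columns that look as if they hold personal names."""
    header = next(csv.reader(io.StringIO(csv_text)), [])
    candidates = []
    for column in header:
        label = column.strip().lower()
        # Match a plain "Name" column exactly, so "Place name" or "Ship name" are not picked up.
        if label == "name" or any(hint in label for hint in NAME_HINTS):
            candidates.append(column)
    return candidates
```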

Datasette uses SQLite databases to store its data, so I decided to create one database for each GLAM organisation. I wrote some code to work through my list of datasets, saving each one into an SQLite database, indexing the name columns, and writing information about the dataset to a metadata.json file. Datasette uses this file to display information such as the title, source, licence, and last modified date of each index.
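The loading step might look something like the sketch below. It is not the original code. It uses only the standard library, assumes an SQLite build with FTS5, and creates an external-content FTS5 table named `<table>_fts` over the chosen name columns. The keys of each dataset dict (`org`, `table`, `csv_path`, `name_columns`, `modified` and so on) are hypothetical. The metadata uses Datasette's `title`, `source`, `source_url`, `license` and `license_url` keys; this sketch puts the last modified date into `description`. Running it twice against the same files will fail because the tables already exist.

```python
import csv
import json
import sqlite3
from pathlib import Path

def quote(identifier):
    """Quote an SQLite identifier such as a table or column name."""
    return '"' + identifier.replace('"', '""') + '"'

def load_dataset(conn, table, csv_path, name_columns):
    """Copy a CSV into `table`, then build an FTS5 index over its name columns."""
    with open(csv_path, newline="", encoding="utf-8-sig") as f:
        reader = csv.reader(f)
        header = next(reader)
        width = len(header)
        columns = ", ".join(f"{quote(c)} TEXT" for c in header)
        conn.execute(f"CREATE TABLE {quote(table)} ({columns})")
        placeholders = ", ".join("?" * width)
        # Pad or trim ragged rows so every row has exactly one value per column.
        rows = ((row + [""] * width)[:width] for row in reader)
        conn.executemany(f"INSERT INTO {quote(table)} VALUES ({placeholders})", rows)
    fts = quote(table + "_fts")
    fts_columns = ", ".join(quote(c) for c in name_columns)
    content = table.replace("'", "''")
    conn.execute(f"CREATE VIRTUAL TABLE {fts} USING fts5({fts_columns}, content='{content}')")
    # 'rebuild' fills the full-text index from the content table.
    conn.execute(f"INSERT INTO {fts}({fts}) VALUES ('rebuild')")

def build(datasets, out_dir):
    """Load each dataset into its organisation's database and write metadata.json."""
    out_dir = Path(out_dir)
    metadata = {"databases": {}}
    for ds in datasets:
        conn = sqlite3.connect(out_dir / f"{ds['org']}.db")
        with conn:  # commits if loading succeeds
            load_dataset(conn, ds["table"], ds["csv_path"], ds["name_columns"])
        conn.close()
        db = metadata["databases"].setdefault(ds["org"], {"tables": {}})
        db["tables"][ds["table"]] = {
            "title": ds["title"],
            "source": ds["source"],
            "source_url": ds["source_url"],
            "license": ds["license"],
            "license_url": ds["license_url"],
            "description": f"Last modified: {ds['modified']}",
        }
    (out_dir / "metadata.json").write_text(json.dumps(metadata, indent=2), encoding="utf-8")
    return metadata
```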

Once that was done, I could fire up Datasette and feed it all the SQLite databases. Amazingly, it all worked, and searching across all the indexes was remarkably quick! To make it publicly available, I used Datasette’s publish command to push everything (about 1.4 GB of data) to Google Cloud Run. The first time I used Cloud Run, it took some time to get the authentication and other settings working properly. This time was much smoother. Before long it was live!
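The publish step can be scripted as well. This sketch only assembles the arguments for `datasette publish cloudrun`, using its `--service`, `--metadata` and `--install` options. The service name in the usage note is a hypothetical placeholder, and actually running the command requires the Google Cloud SDK (`gcloud`) to be installed and authenticated.

```python
import subprocess

def publish_command(db_files, service, metadata="metadata.json", plugins=("datasette-search-all",)):
    """Assemble a `datasette publish cloudrun` command for a set of SQLite files."""
    cmd = ["datasette", "publish", "cloudrun", *db_files,
           f"--service={service}", f"--metadata={metadata}"]
    # Each --install adds a package (here, a plugin) to the deployed container.
    cmd += [f"--install={plugin}" for plugin in plugins]
    return cmd

def publish(db_files, service):
    """Run the publish command; raises CalledProcessError if it fails."""
    subprocess.run(publish_command(db_files, service), check=True)
```

For example, `publish(sorted(glob.glob("*.db")), "glam-name-search")` would deploy every database in the current folder. Before publishing, you can preview the same setup locally with `datasette *.db --metadata metadata.json`.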

Once I knew it all worked, I decided to add in another 59 indexes from the NSW State Archives. I also plugged in a few extra indexes from the Public Record Office of Victoria. These datasets are stored as ZIP files in the Victorian government data portal, so it took a little bit of extra manual processing to get everything sorted. But finally I had all 195 indexes loaded.
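Some of that ZIP handling can be automated. The sketch below is an assumption about one reasonable approach, not the processing actually used. It extracts every CSV from an archive into a single folder and skips macOS `__MACOSX` resource-fork files. Keeping only the base filename also prevents entries from being written outside the target folder.

```python
import shutil
import zipfile
from pathlib import Path

def extract_csvs(zip_source, out_dir):
    """Extract every .csv member of a ZIP archive (path or file object) into out_dir."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    with zipfile.ZipFile(zip_source) as zf:
        for info in zf.infolist():
            name = Path(info.filename).name
            if info.is_dir() or not name.lower().endswith(".csv"):
                continue
            # Skip resource-fork junk that macOS adds to archives.
            if info.filename.startswith("__MACOSX/") or name.startswith("._"):
                continue
            target = out_dir / name  # flatten folders; also blocks "../" paths
            with zf.open(info) as src, open(target, "wb") as dst:
                shutil.copyfileobj(src, dst)
            written.append(target)
    return written
```

Because folders are flattened, two CSVs with the same filename in different folders would overwrite each other, so check the archive listing first if that seems likely.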

What now? That depends on whether people find this experiment useful. I have a few ideas for improvements. But if people do use it, then the costs will go up. I’m going to have to monitor this over the next couple of months to see if I can afford to keep it going. If you want to help with the running costs, you might like to sign up as a GitHub sponsor.

And please let me know if you think it’s worth developing! #dhhacks