From 48 PDFs to one searchable database – opening up the Tasmanian Post Office Directories with the GLAM Workbench

A few weeks ago I created a new search interface to the NSW Post Office Directories from 1886 to 1950. Since then, I’ve used the same process on the Sydney Telephone Directories from 1926 to 1954. Both of these publications had been digitised by the State Library of NSW and made available through Trove. To build the new interfaces I downloaded the text from Trove, indexed it by line, and linked it back to the online page images.

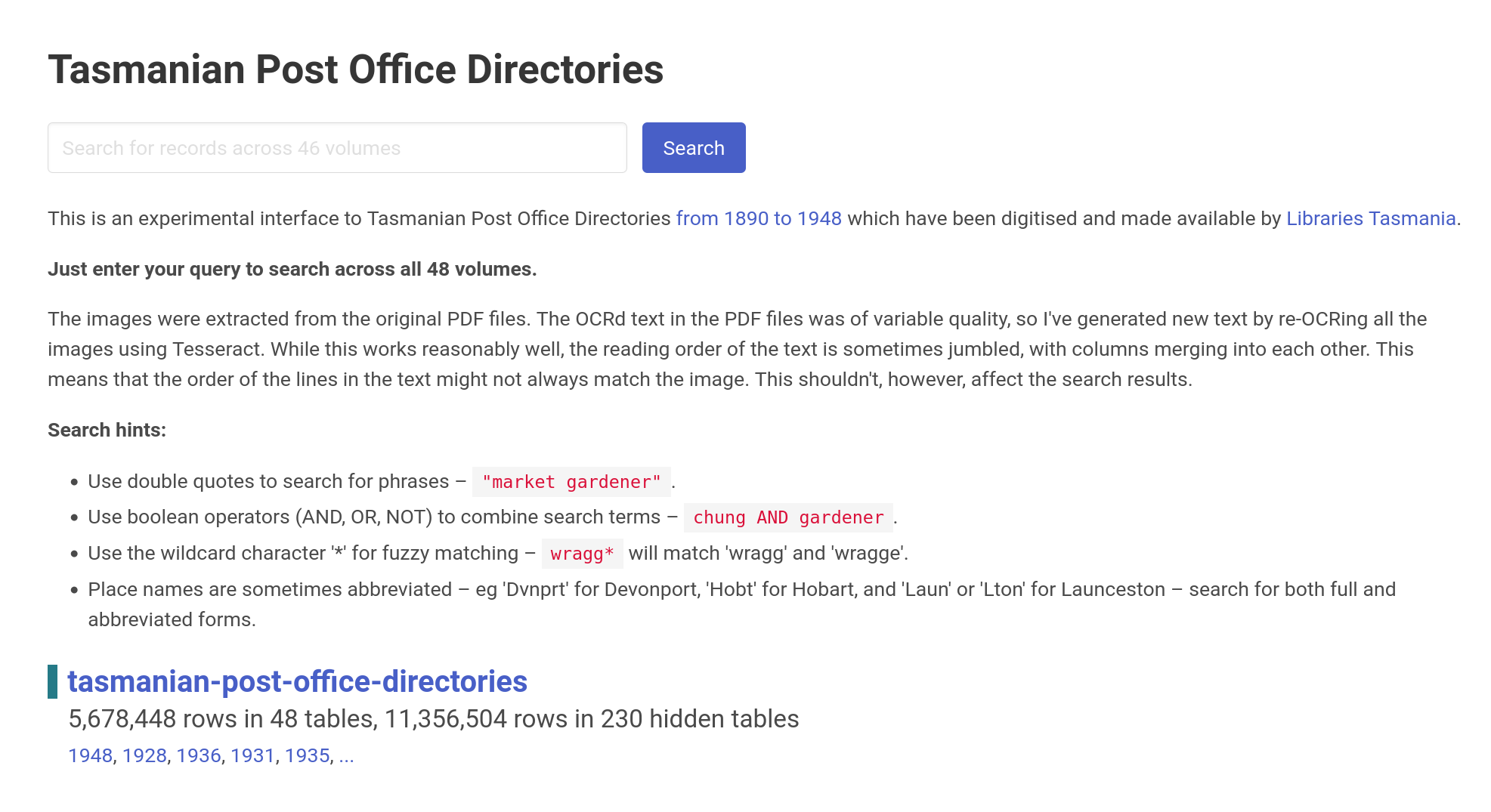

But there are similar directories from other states that are not available through Trove. The Tasmanian Post Office Directory, for example, has been digitised for the years 1890 to 1948 and made available by Libraries Tasmania as 48 individual PDF files. While it’s great that they’ve been digitised, there’s no way to search across them without downloading all the PDFs.

As part of the Everyday Heritage project, Kate Bagnall and I are working on mapping Tasmania’s Chinese history – finding new ways of connecting people and places. The Tasmanian Post Office Directories will be a useful source for us, so I thought I’d try converting them into a database as I had with the NSW directories. But how?

There were several stages involved:

- Downloading the 48 PDF files

- Extracting the text and images from the PDFs

- Making the separate images available online so they could be integrated with the search interface

- Loading all the text and image links into a SQLite database for online delivery using Datasette

And here’s the result!

Search for people and places in Tasmania from 1890 to 1948!

The complete process is documented in a series of notebooks, shared through the brand new Libraries Tasmania section of the GLAM Workbench. As with the NSW directories, the processing pipeline I developed could be reused with similar publications in PDF form. Any suggestions?

Some technical details

There were some interesting challenges in connecting up all the pieces. Extracting the text and images from the PDFs was remarkably easy using PyMuPDF, but the quality of the text wasn’t great. In particular, I had trouble with columns – values from neighbouring columns would be munged together, upsetting the order of the text. I tried working with the positional information provided by PyMuPDF to improve column detection, but every improvement seemed to raise another issue. I was also worried that too much processing might result in some text being lost completely.
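To give a sense of what working with the positional information involves, here is a minimal sketch of column-aware ordering. It is not the code from the notebooks. It assumes each text block arrives as `(x0, y0, x1, y1, text)`, which matches the first five fields of the tuples returned by PyMuPDF's `page.get_text("blocks")`, and it assumes equal-width columns, which real directory pages often break. The function name `order_by_column` and the sample blocks are made up for illustration.

```python
from typing import List, Tuple

# A block is (x0, y0, x1, y1, text): the first five fields of the tuples
# that PyMuPDF's page.get_text("blocks") returns.
Block = Tuple[float, float, float, float, str]


def order_by_column(blocks: List[Block], page_width: float, n_columns: int = 2) -> List[str]:
    """Return block texts in reading order: column by column, top to bottom.

    Assumes equal-width columns (a simplification; hypothetical helper).
    """
    column_width = page_width / n_columns

    def sort_key(block: Block):
        x0, y0, x1, _y1, _text = block
        centre = (x0 + x1) / 2
        # Clamp so a block touching the right edge stays in the last column.
        column = min(int(centre // column_width), n_columns - 1)
        return (column, y0)

    return [block[4].strip() for block in sorted(blocks, key=sort_key)]


# Made-up blocks on a 600-unit-wide page, listed in the jumbled order
# that row-by-row extraction tends to produce.
blocks = [
    (310, 50, 590, 60, "right 1"),
    (10, 50, 290, 60, "left 1"),
    (10, 70, 290, 80, "left 2"),
    (310, 70, 590, 80, "right 2"),
]
ordered = order_by_column(blocks, page_width=600)
print(ordered)
```

Even this simple version shows where things go wrong: headings that span both columns, pages with three columns, or skewed scans all violate the equal-width assumption.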

I tried a few experiments re-OCRing the images with Textract (a paid service from Amazon) and Tesseract. The basic Textract product provides good OCR, but again I needed to work with the positional information to try to reassemble the columns. On the other hand, Tesseract’s automatic layout detection seemed to work pretty well with just the default settings. It wasn’t perfect, but good enough to support search and navigation. So I decided to re-OCR all the images using Tesseract. I’m pretty happy with the result.
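Since the text is indexed by line, the OCR output for each page needs to be split into numbered rows before it can be searched. The sketch below shows one way that step might look. It assumes the Tesseract output for a page is already available as a plain string (the OCR call itself is left out), and the function name `lines_to_rows` and the `page`, `line`, and `text` fields are illustrative choices, not the schema used in the notebooks.

```python
from typing import Dict, Iterator


def lines_to_rows(ocr_text: str, page: int) -> Iterator[Dict[str, object]]:
    """Yield one row per non-blank line of OCR text, numbered within the page."""
    line_number = 0
    for raw_line in ocr_text.splitlines():
        text = " ".join(raw_line.split())  # collapse runs of whitespace
        if not text:
            continue  # skip the blank lines OCR leaves between blocks
        line_number += 1
        yield {"page": page, "line": line_number, "text": text}


# Placeholder text standing in for one page of OCR output.
sample = "first  line\n\n second line \n"
rows = list(lines_to_rows(sample, page=12))
for row in rows:
    print(row)
```

Keeping the page and line numbers with each row is what makes it possible to link a search result back to its position on the page image.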

The search interfaces for the NSW directories display page images loaded directly from Trove into an OpenSeadragon viewer. The Tasmanian directories have no online images to integrate in this way, so I had to set up some hosting for the images I extracted from the PDFs. I could have just loaded them from an Amazon S3 bucket, but I wanted to use IIIF to deliver the images. Fortunately there’s a great project that uses Amazon’s Lambda service to provide a Serverless IIIF Image API. To prepare the images for IIIF, you convert them to pyramidal TIFFs (a format that contains an image at a number of different resolutions) using VIPS. Then you upload the TIFFs to an S3 bucket and point the Serverless IIIF app at the bucket. There are more details in this notebook. It’s very easy and seems to deliver images amazingly quickly.
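Once the images are behind an IIIF endpoint, any size or crop can be requested just by building a URL. The sketch below follows the URL pattern defined by the IIIF Image API, `{base}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}`. The endpoint `https://iiif.example.org/iiif/2` and the identifier `tasmania-1890-page-0001` are hypothetical, as are the helper names; the real values depend on how the Serverless IIIF app and the bucket are set up.

```python
from urllib.parse import quote


def iiif_image_url(base_url, identifier, region="full", size="max",
                   rotation="0", quality="default", fmt="jpg"):
    """Build an IIIF Image API request URL.

    Pattern: {base}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}
    """
    # Identifiers must be URL-encoded, including any slashes they contain.
    encoded_id = quote(identifier, safe="")
    return f"{base_url.rstrip('/')}/{encoded_id}/{region}/{size}/{rotation}/{quality}.{fmt}"


def iiif_info_url(base_url, identifier):
    """URL of the info.json document that describes an image to a viewer."""
    return f"{base_url.rstrip('/')}/{quote(identifier, safe='')}/info.json"


BASE = "https://iiif.example.org/iiif/2"  # hypothetical endpoint
IMAGE_ID = "tasmania-1890-page-0001"      # hypothetical identifier

full = iiif_image_url(BASE, IMAGE_ID)               # whole page, largest size
thumb = iiif_image_url(BASE, IMAGE_ID, size="200,")  # scaled to 200 pixels wide
info = iiif_info_url(BASE, IMAGE_ID)
print(full, thumb, info, sep="\n")
```

A viewer such as OpenSeadragon can be pointed at the `info.json` URL and will then request only the tiles it needs, which is where the pyramidal TIFFs pay off.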

The rest of the pipeline followed the process I used with the NSW directories – using sqlite-utils and Datasette to package the data and deliver it online via Google Cloud Run.
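As a rough illustration of what the packaging step produces, the sketch below builds a small SQLite database with a full-text index over the lines. To keep it dependency-free it uses Python's built-in `sqlite3` module rather than sqlite-utils, and it relies on SQLite's FTS5 extension being available (it is in most Python builds). The table and column names and the sample rows are made up, not the schema of the actual database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE lines ("
    "id INTEGER PRIMARY KEY, year INTEGER, page INTEGER, "
    "line INTEGER, text TEXT, image_id TEXT)"
)
# Full-text index that refers back to the lines table for its content.
conn.execute(
    "CREATE VIRTUAL TABLE lines_fts "
    "USING fts5(text, content='lines', content_rowid='id')"
)

# Made-up rows for illustration: (year, page, line, text, image_id).
sample_rows = [
    (1890, 1, 1, "alpha street hobart", "1890-0001"),
    (1890, 1, 2, "beta road launceston", "1890-0001"),
    (1948, 5, 1, "gamma lane hobart", "1948-0005"),
]
conn.executemany(
    "INSERT INTO lines (year, page, line, text, image_id) VALUES (?, ?, ?, ?, ?)",
    sample_rows,
)
# Populate the index from the table.
conn.execute("INSERT INTO lines_fts (rowid, text) SELECT id, text FROM lines")
conn.commit()


def search(connection, query):
    """Return matching lines with the image each one belongs to."""
    return connection.execute(
        "SELECT lines.year, lines.page, lines.line, lines.text, lines.image_id "
        "FROM lines_fts JOIN lines ON lines.id = lines_fts.rowid "
        "WHERE lines_fts MATCH ? "
        "ORDER BY lines.year, lines.page, lines.line",
        (query,),
    ).fetchall()


results = search(conn, "hobart")
for row in results:
    print(row)
```

Each result carries an `image_id`, which is the piece that lets a search interface jump from a matching line to the right page image.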

Postscript: Time and money

I thought I should add a little note about costs (time and money) in case anyone was interested in using this workflow on other publications. I started working on this on Sunday afternoon and had a full working version up about 24 hours later – that includes a fair bit of work that I didn’t end up using, but doesn’t include the time I spent re-OCRing the text a day or so later. This was possible because I was reusing bits of code from other projects, and taking advantage of some awesome open-source software. Now that the processing pipeline is pretty well-defined and documented it should be even faster.

The search interface uses cloud services from Amazon and Google. It’s a bit tricky to calculate the precise costs of these, but here’s a rough estimate.

I uploaded 63.9 GB of images to Amazon S3. These should cost about US$1.47 per month to store.
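The storage figure is simple arithmetic: size multiplied by a per-gigabyte monthly rate. The sketch below assumes a rate of US$0.023 per GB per month, which is the S3 Standard list price in some regions and happens to reproduce the figure above. The rate is an assumption, not something stated in this post, and actual pricing varies by region and storage class.

```python
def s3_monthly_cost(size_gb: float, price_per_gb_month: float = 0.023) -> float:
    """Monthly storage cost in US dollars, rounded to cents.

    The default rate is an assumed list price, not a quoted one.
    """
    return round(size_gb * price_per_gb_month, 2)


images_cost = s3_monthly_cost(63.9)
print(f"US${images_cost:.2f} per month")
```

Swapping in a different rate or collection size gives a quick estimate for other publications.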

The Serverless IIIF API uses Amazon’s Lambda service. At the moment my usage is within the free tier, so $0 so far.

The Datasette instance uses Google Cloud Run. Costs for this service are based on a combination of usage, storage space, and the configuration of the environment. The size of the database for the Tasmanian directories is about 600 MB, so I can get away with 2 GB of application memory. (The NSW Post Office directory currently uses 8 GB.) These services scale to zero – so basically they shut down if they’re not being used. This saves a lot of money, but means there can be a pause if they need to start up again. I’m running the Tasmanian and NSW directories, as well as the GLAM Name Index search, within the same Google Cloud account, and I’m not quite sure how to itemise the costs. But overall, it’s costing me about US$4.00 a month to run them all. Of course if usage increases, so will the costs!

So I suppose the point is that these sorts of approaches can be quite a practical and cost-effective way of improving access to digitised resources, and don’t need huge investments in time or infrastructure.

If you want to contribute to the running costs of the NSW and Tasmanian directories you can sponsor me on GitHub.